I’m trying to integrate a Hugging Face endpoint into a LangChain project using the `HuggingFaceEndpoint` class provided by LangChain:

```python
from langchain_huggingface import HuggingFaceEndpoint

llm = HuggingFaceEndpoint(
    repo_id="deepseek-ai/Janus-Pro-7B",
    task="image-text-to-text",
    max_new_tokens=512,
    do_sample=False,
    repetition_penalty=1.03,
)
```

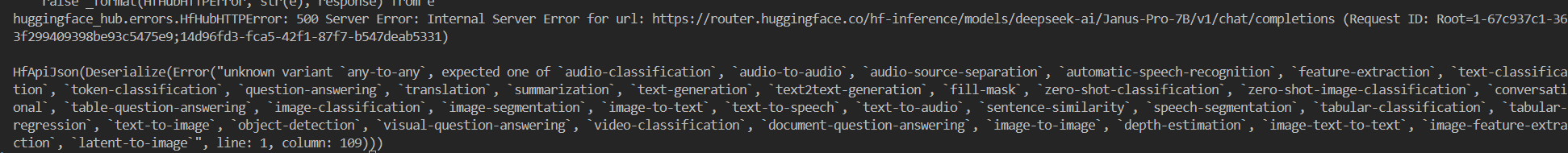

But it returns a 500 Server Error with the message `unknown variant 'any-to-any'`. I’ve tried both `image-text-to-text` and `image-to-text` as the task, and both return the same error.

It isn’t only Janus-Pro-7B: other VLMs such as Qwen2.5-VL-7B-Instruct and glm-4v-9b fail the same way. I suspect that `HuggingFaceEndpoint` cannot run VLMs at all.
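One way to narrow this down is to bypass LangChain and talk to the model directly. The sketch below assumes `huggingface_hub` is installed, that a token is available via `HF_TOKEN`, and that the model is actually served with a chat-completion route (which may not be true for these models). `hub_pipeline_tag` looks up the task the Hub lists for a model, since `any-to-any` in the error looks like a Hub pipeline tag; `ask_vlm` sends an image plus a prompt using the OpenAI-style message format. The function names and the image URL are hypothetical.

```python
from __future__ import annotations

from typing import Any


def build_vlm_messages(image_url: str, prompt: str) -> list[dict[str, Any]]:
    """Pair one image URL with a text prompt in the OpenAI-style chat format."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ],
        }
    ]


def hub_pipeline_tag(repo_id: str) -> str | None:
    """Return the task ("pipeline tag") the Hub lists for a model."""
    # Lazy import: needs huggingface_hub installed and network access.
    from huggingface_hub import model_info

    return model_info(repo_id).pipeline_tag


def ask_vlm(repo_id: str, image_url: str, prompt: str, max_tokens: int = 512) -> str | None:
    """Send an image + prompt to a chat-completion endpoint and return the reply text."""
    from huggingface_hub import InferenceClient  # lazy import, as above

    # Assumption: the model is served with a chat-completion route.
    # If it is not, this call can fail just like the HuggingFaceEndpoint call did.
    client = InferenceClient(model=repo_id)  # picks up HF_TOKEN from the environment
    response = client.chat_completion(
        messages=build_vlm_messages(image_url, prompt),
        max_tokens=max_tokens,
    )
    return response.choices[0].message.content
```

For example, `hub_pipeline_tag("deepseek-ai/Janus-Pro-7B")` shows which task the Hub assigns to the model; if it differs from the `task` passed to `HuggingFaceEndpoint`, that mismatch is a likely place to look. Note also that the original `HuggingFaceEndpoint` call never passes an image, so even a successful request would only send text.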